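Before you can start learning k8s, you need a k8s cluster to work with. There are two main ways to deploy one (the two I know of; there may be more): from the release binaries, or with kubeadm. Here we use kubeadm.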

Hardware preparation

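I want to use kubeadm to deploy a k8s cluster with multiple masters, which is closer to what a production environment needs. I won't go into k8s fundamentals here.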

Servers and IPs

| Hostname | Role | IP |
| --- | --- | --- |
| k8s-m1 | master1 | 172.16.26.81 |
| k8s-m2 | master2 | 172.16.26.82 |
| k8s-m3 | master3 | 172.16.26.83 |
| k8s-n1 | node1 | 172.16.26.121 |
| k8s-n2 | node2 | 172.16.26.122 |
| k8s-n3 | node3 | 172.16.26.123 |

Floating IP (VIP): 172.16.26.80

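Environment preparation

System configuration

The base operating system is CentOS 7.5 (desktop edition), which already includes some bundled tools such as wget. Run all of the system configuration steps on every node.

Disable SELinux and the firewall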

```bash
systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
```

Disable swap

```bash
swapoff -a
yes | cp /etc/fstab /etc/fstab_bak
cat /etc/fstab_bak | grep -v swap > /etc/fstab
```
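The `grep -v swap` line above deletes every fstab line that contains the word "swap", including comments. If you would rather keep the entries and only disable them, here is a minimal alternative sketch. It assumes a standard fstab layout, where the third field is the filesystem type. `comment_out_swap` is a hypothetical helper. It takes the fstab path as an argument (default `/etc/fstab`), so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# comment_out_swap [FSTAB]: prefix every active swap entry with '#'.
# Hypothetical helper; it keeps a FSTAB.bak copy next to the file.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp -f "$fstab" "${fstab}.bak"
  # An active swap entry is a non-comment line whose 3rd field (fs type) is "swap".
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "${fstab}.bak" > "$fstab"
}
```

Usage: `swapoff -a && comment_out_swap`.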

Network configuration

```bash
modprobe br_netfilter
# note: this replaces the entire contents of /etc/sysctl.conf
cat > /etc/sysctl.conf <<EOF
vm.swappiness = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl -p
```
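`modprobe` loads `br_netfilter` only until the next reboot, and the kernel upgrade below reboots the machine. The block above also overwrites all of `/etc/sysctl.conf`. Below is a minimal sketch that writes two drop-in files instead. It assumes a systemd host such as CentOS 7, where `/etc/modules-load.d/*.conf` is read at boot and `sysctl --system` reads `/etc/sysctl.d/*.conf`. The helper `persist_bridge_netfilter` and both file names are my own, hypothetical choices.

```bash
#!/usr/bin/env bash
# persist_bridge_netfilter [ETC_DIR]: make br_netfilter and the bridge sysctls
# survive a reboot without overwriting /etc/sysctl.conf.
# ETC_DIR defaults to /etc; pass another directory to try it out safely.
persist_bridge_netfilter() {
  local etc="${1:-/etc}"
  mkdir -p "$etc/modules-load.d" "$etc/sysctl.d"
  # systemd-modules-load reads one module name per line at boot
  echo br_netfilter > "$etc/modules-load.d/br_netfilter.conf"
  cat > "$etc/sysctl.d/99-bridge-nf.conf" <<'EOF'
vm.swappiness = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

Usage: `modprobe br_netfilter && persist_bridge_netfilter && sysctl --system`.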

Kernel upgrade

Install the kernel dependency package

```bash
[ ! -f /usr/bin/perl ] && yum install perl -y
```

Upgrading the kernel uses the ELRepo yum repository. First import the ELRepo GPG key and install the ELRepo repo package:

```bash
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
```

List the available kernels

```bash
yum --disablerepo="*" --enablerepo="elrepo-kernel" list available --showduplicates
```

Install the latest kernel

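```bash
# print only the version numbers of the available kernel-ml packages
yum --disablerepo="*" --enablerepo="elrepo-kernel" list available --showduplicates | grep -Po '^kernel-ml.x86_64\s+\K\S+(?=.el7)'
# install the latest mainline kernel and the matching -devel package
yum --disablerepo="*" --enablerepo=elrepo-kernel install -y kernel-ml{,-devel}
```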

Change the default boot entry

```bash
# entry 0 is normally the most recently installed kernel
grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg
```

After rebooting, check the current kernel

```bash
reboot
# after the reboot:
grubby --default-kernel   # kernel that boots by default
uname -r                  # kernel that is running now
```
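`grubby --default-kernel` shows which kernel will boot by default, not necessarily the one that is running. Here is a small sketch for checking this from a script after the reboot. The hypothetical helper `version_ge` compares two version strings with GNU `sort -V`, and `kernel_at_least` applies it to `uname -r`. You choose the minimum version to pass in; the post does not name one.

```bash
#!/usr/bin/env bash
# version_ge A B: succeed if version A >= version B (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# kernel_at_least MIN: succeed if the running kernel is at least MIN.
kernel_at_least() {
  local running
  # strip the release suffix, e.g. 5.1.15-1.el7.elrepo.x86_64 -> 5.1.15
  running="$(uname -r | cut -d- -f1)"
  version_ge "$running" "$1"
}
```

Usage: `kernel_at_least 4.19 && echo "the new kernel is running"`.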

IPVS

Install the IPVS tools

```bash
yum install ipvsadm ipset sysstat conntrack libseccomp -y
```

Configure the kernel modules to load

```bash
# write a script that loads every listed IPVS module available for the running kernel
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in \${ipvs_modules}; do
    /sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1
    if [ \$? -eq 0 ]; then
        /sbin/modprobe \${kernel_module}
    fi
done
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
```
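`lsmod | grep ip_vs` shows whatever matched, but it does not tell you which modules from the list failed to load. The loop silently skips any module that `modinfo` cannot find. The sketch below assumes the module list from the script above. The hypothetical helper `missing_modules` reads a table in `/proc/modules` format and prints each requested module that is not loaded. Modules compiled into the kernel do not appear in `/proc/modules`, so they would also be reported as missing.

```bash
#!/usr/bin/env bash
# missing_modules MODULES_FILE MODULE...: print each MODULE that does not
# appear in the first column of MODULES_FILE (normally /proc/modules).
missing_modules() {
  local table="$1" m
  shift
  for m in "$@"; do
    # awk exits 0 only if some line's first field equals the module name
    awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$table" || echo "$m"
  done
}
```

Usage: `missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`. Empty output means all of them are loaded.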

Kubernetes sysctl parameters

```bash
cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_intvl = 30
net.ipv4.tcp_keepalive_probes = 10
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
net.ipv4.neigh.default.gc_stale_time = 120
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.default.arp_announce = 2
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_announce = 2
net.ipv4.ip_forward = 1
net.ipv4.tcp_max_tw_buckets = 5000
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 1024
net.ipv4.tcp_synack_retries = 2
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.netfilter.nf_conntrack_max = 2310720
fs.inotify.max_user_watches=89100
fs.may_detach_mounts = 1
fs.file-max = 52706963
fs.nr_open = 52706963
net.bridge.bridge-nf-call-arptables = 1
vm.swappiness = 0
vm.overcommit_memory=1
vm.panic_on_oom=0
EOF
sysctl --system
```
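`sysctl --system` typically prints an error for any key the running kernel does not provide and then carries on with the remaining keys, so it is worth reading a few values back. Every sysctl key maps to a file under `/proc/sys`, with the dots replaced by slashes; for example, `net.ipv4.ip_forward` is `/proc/sys/net/ipv4/ip_forward`. The sketch below builds a hypothetical helper, `check_sysctl`, on that mapping. Its optional third argument replaces `/proc/sys`, so the helper can be tried against a fake directory tree.

```bash
#!/usr/bin/env bash
# check_sysctl KEY EXPECTED [ROOT]: compare the value of sysctl KEY, read from
# ROOT (default /proc/sys), with EXPECTED.
# Returns 0 on match, 1 on mismatch, 2 if the key does not exist.
check_sysctl() {
  local key="$1" expected="$2" root="${3:-/proc/sys}"
  local path actual
  path="$root/$(printf '%s' "$key" | tr . /)"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
  if [ ! -r "$path" ]; then
    echo "$key: not available on this kernel" >&2
    return 2
  fi
  actual="$(cat "$path")"
  if [ "$actual" != "$expected" ]; then
    echo "$key: expected $expected, got $actual" >&2
    return 1
  fi
}
```

Usage: `for kv in net.ipv4.ip_forward=1 net.bridge.bridge-nf-call-iptables=1; do check_sysctl "${kv%%=*}" "${kv#*=}"; done`.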

Software installation

Install Docker

```bash
# install Docker; I used 18.06.3-ce
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
yum makecache fast
# list the available versions
yum list docker-ce --showduplicates | sort -r
# install a specific version: replace <VERSION_STRING> with one from the list above
sudo yum install docker-ce-<VERSION_STRING>
```
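Docker's CentOS instructions build the package name from the version column of `yum list`: drop the epoch (such as `3:`) and cut at the first hyphen, giving for example `docker-ce-18.09.1`. Here is a sketch that automates this. It assumes the usual three-column `yum list` output. The hypothetical helper `docker_pkg_version` prints the first listed docker-ce version that starts with a given prefix, reduced to that form.

```bash
#!/usr/bin/env bash
# docker_pkg_version PREFIX: read `yum list docker-ce --showduplicates` output
# on stdin and print the first docker-ce version starting with PREFIX,
# with the epoch and the release part removed.
docker_pkg_version() {
  awk -v p="$1" '
    $1 ~ /^docker-ce\./ {
      v = $2
      sub(/^[0-9]+:/, "", v)   # drop the epoch, e.g. "3:"
      sub(/-.*/, "", v)        # drop the release, e.g. "-3.el7"
      if (index(v, p) == 1) { print v; exit }
    }'
}
```

Usage: `yum install -y "docker-ce-$(yum list docker-ce --showduplicates | sort -r | docker_pkg_version 18.06.3)"`. The `^docker-ce\.` match skips related packages such as `docker-ce-cli`.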

Install the Kubernetes components

```bash
# note: in a .repo file, the second gpgkey URL must be indented to continue the value
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubelet kubeadm kubectl ebtables
```
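The `yum install` above installs whatever kubelet/kubeadm/kubectl release is newest on the mirror. The kubeadm config used later, however, pins `kubernetesVersion: v1.13.0` and the `kubeadm.k8s.io/v1beta1` API, which a much newer kubeadm may refuse. This minimal sketch assumes you want all three packages at the same version as that config. The hypothetical helper `k8s_packages` prints yum `name-version` specs.

```bash
#!/usr/bin/env bash
# k8s_packages VERSION: print kubelet/kubeadm/kubectl pinned to VERSION in
# yum's name-version form. Accepts "1.13.0" or "v1.13.0".
k8s_packages() {
  local v="${1#v}"   # strip a leading "v"
  printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$v" "$v" "$v"
}
```

Usage: `yum install -y $(k8s_packages v1.13.0) ebtables`. The substitution is deliberately left unquoted so that the three specs become separate arguments.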

Install Keepalived and HAProxy

(not needed on the worker nodes)

Install

```bash
yum install -y socat keepalived haproxy
```

Configure

Set the variables

```bash
cd ~/
# create the cluster information file
cat > ./cluster-info <<EOF
CP0_IP=172.16.26.81
CP1_IP=172.16.26.82
CP2_IP=172.16.26.83
VIP=172.16.26.80
NET_IF=eth0
CIDR=10.244.0.0/16
EOF
# configure haproxy and keepalived
bash -c "$(curl -fsSL https://raw.githubusercontent.com/hnbcao/kubeadm-ha-master/v1.14.0/keepalived-haproxy.sh)"
```
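The later steps, namely the kubeadm config and the certificate copy, read `CP0_IP`, `CP1_IP`, `CP2_IP`, `VIP` and `CIDR` from this file, so the file must be sourced into the shell first. Here is a minimal sketch of a hypothetical helper, `load_cluster_info`. It sources the file and fails if any of the variables is empty.

```bash
#!/usr/bin/env bash
# load_cluster_info [FILE]: source the cluster-info file (default ~/cluster-info)
# and check that every variable used by the later steps is set.
load_cluster_info() {
  local file="${1:-$HOME/cluster-info}" var
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
  . "$file"   # plain assignments in the file become global variables
  for var in CP0_IP CP1_IP CP2_IP VIP NET_IF CIDR; do
    if [ -z "${!var}" ]; then   # bash indirect expansion: value of $var's name
      echo "$var is not set in $file" >&2
      return 1
    fi
  done
}
```

Usage: `load_cluster_info && echo "VIP is ${VIP}"`.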

Enable the services at boot

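```bash
# start docker
# Docker >= 1.13 sets the iptables FORWARD policy to DROP; reset it to ACCEPT once dockerd is up.
# The sed inserts that line at line 13 of the packaged unit, assuming line 13 is inside [Service].
sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service
systemctl daemon-reload
systemctl enable docker
systemctl start docker
# enable kubelet at boot
systemctl enable kubelet
# masters only: keepalived and haproxy
systemctl enable keepalived
systemctl enable haproxy
```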
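The `sed -i "13i ..."` edit depends on line 13 of the packaged `docker.service` falling inside the `[Service]` section. A Docker package upgrade also replaces that file under `/usr/lib/systemd/system`, which drops the inserted line. The usual way to add settings to a packaged unit is a systemd drop-in file. Here is a sketch that uses the same `ExecStartPost` line. The helper `write_docker_dropin` and the drop-in file name are hypothetical. The directory argument exists only so the helper can be tried outside `/etc`.

```bash
#!/usr/bin/env bash
# write_docker_dropin [UNIT_DIR]: add ExecStartPost to docker.service through a
# drop-in file instead of editing the packaged unit.
# UNIT_DIR defaults to /etc/systemd/system.
write_docker_dropin() {
  local dir="${1:-/etc/systemd/system}/docker.service.d"
  mkdir -p "$dir"
  cat > "$dir/10-forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}
```

Usage: `write_docker_dropin && systemctl daemon-reload && systemctl restart docker`. `systemctl cat docker` should then list the drop-in after the main unit.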

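Deployment

Initialize the master nodes

Before starting, set up passwordless SSH login from master1 to all other nodes (straightforward, so skipped here).

Initialize master1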

```bash
# load CP0_IP, CP1_IP, CP2_IP, VIP and CIDR from the file created earlier
source ~/cluster-info
# networkPlugin is not a kubeadm field; kubeadm already runs the kubelet with --network-plugin=cni
cat > /etc/kubernetes/kubeadm-config.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.0
controlPlaneEndpoint: "${VIP}:8443"
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - ${CP0_IP}
  - ${CP1_IP}
  - ${CP2_IP}
  - ${VIP}
networking:
  # 10.244.0.0/16 is Flannel's default pod network; change it to match your CNI plugin
  podSubnet: ${CIDR}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
# maxPods is a kubelet setting, not a ClusterConfiguration field
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
maxPods: 100
EOF
kubeadm init --config /etc/kubernetes/kubeadm-config.yaml
mkdir -p $HOME/.kube
cp -f /etc/kubernetes/admin.conf ${HOME}/.kube/config
```
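With `controlPlaneEndpoint` set, the `admin.conf` that kubeadm writes should point at the VIP and the HAProxy port, not at master1's own address. If it shows `https://172.16.26.81:6443`, the config file was not picked up. Here is a small sketch of a hypothetical helper, `kubeconfig_server`, that prints the `server:` entries of a kubeconfig file.

```bash
#!/usr/bin/env bash
# kubeconfig_server [FILE]: print the API server URL(s) in a kubeconfig file
# (default /etc/kubernetes/admin.conf).
kubeconfig_server() {
  awk '$1 == "server:" { print $2 }' "${1:-/etc/kubernetes/admin.conf}"
}
```

Usage: `[ "$(kubeconfig_server)" = "https://${VIP}:8443" ] && echo "admin.conf goes through the VIP"`.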

Copy the shared certificates to master2 and master3

```bash
# master2 and master3 are indexes 1 and 2 of this array (values from ~/cluster-info)
source ~/cluster-info
IPS=(${CP0_IP} ${CP1_IP} ${CP2_IP})
# join command for the extra control-plane nodes, generated on master1
JOIN_CMD=$(kubeadm token create --print-join-command)
for index in 1 2; do
    ip=${IPS[${index}]}
    ssh $ip "mkdir -p /etc/kubernetes/pki/etcd; mkdir -p ~/.kube/"
    scp /etc/kubernetes/pki/ca.crt $ip:/etc/kubernetes/pki/ca.crt
    scp /etc/kubernetes/pki/ca.key $ip:/etc/kubernetes/pki/ca.key
    scp /etc/kubernetes/pki/sa.key $ip:/etc/kubernetes/pki/sa.key
    scp /etc/kubernetes/pki/sa.pub $ip:/etc/kubernetes/pki/sa.pub
    scp /etc/kubernetes/pki/front-proxy-ca.crt $ip:/etc/kubernetes/pki/front-proxy-ca.crt
    scp /etc/kubernetes/pki/front-proxy-ca.key $ip:/etc/kubernetes/pki/front-proxy-ca.key
    scp /etc/kubernetes/pki/etcd/ca.crt $ip:/etc/kubernetes/pki/etcd/ca.crt
    scp /etc/kubernetes/pki/etcd/ca.key $ip:/etc/kubernetes/pki/etcd/ca.key
    scp /etc/kubernetes/admin.conf $ip:/etc/kubernetes/admin.conf
    scp /etc/kubernetes/admin.conf $ip:~/.kube/config
    ssh ${ip} "${JOIN_CMD} --experimental-control-plane"
done
```
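The ten `scp` lines are easy to get wrong when they are repeated by hand. The sketch below keeps the file list in one array. It can also print the commands instead of running them (`DRY_RUN=1`), so you can review them first. `CP_SHARED_FILES`, `run` and `copy_control_plane_files` are hypothetical names. The array holds exactly the files that the loop above copies.

```bash
#!/usr/bin/env bash
# Files under /etc/kubernetes that the loop above copies to master2/master3.
CP_SHARED_FILES=(
  pki/ca.crt pki/ca.key
  pki/sa.key pki/sa.pub
  pki/front-proxy-ca.crt pki/front-proxy-ca.key
  pki/etcd/ca.crt pki/etcd/ca.key
  admin.conf
)

# run CMD...: execute CMD, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# copy_control_plane_files IP: copy every shared file to the same path on IP,
# plus admin.conf as the remote user's kubeconfig.
copy_control_plane_files() {
  local ip="$1" f
  run ssh "$ip" "mkdir -p /etc/kubernetes/pki/etcd ~/.kube"
  for f in "${CP_SHARED_FILES[@]}"; do
    run scp "/etc/kubernetes/$f" "$ip:/etc/kubernetes/$f"
  done
  run scp /etc/kubernetes/admin.conf "$ip:~/.kube/config"
}
```

Usage: preview with `DRY_RUN=1 copy_control_plane_files 172.16.26.82`, then run it again without `DRY_RUN` once the output looks right.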

Join master2 and master3 to the control plane

```bash
# run on master1; ${ip} is master2's or master3's address
JOIN_CMD=$(kubeadm token create --print-join-command)
ssh ${ip} "${JOIN_CMD} --experimental-control-plane"
```

Join the worker nodes

Get the join command

```bash
kubeadm token create --print-join-command
```

Run the printed command on each worker node.
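In an HA cluster, the printed command should target the VIP endpoint (`172.16.26.80:8443` here), not a single master. The command has the form `kubeadm join <endpoint> --token <token> --discovery-token-ca-cert-hash sha256:<hash>`. Here is a sketch of a hypothetical helper, `join_endpoint`. It extracts the endpoint so a script can check it before running the command on the workers.

```bash
#!/usr/bin/env bash
# join_endpoint "JOIN_CMD": print the API endpoint of a `kubeadm join` command,
# i.e. the first argument after "join". Prints nothing if there is no "join".
join_endpoint() {
  printf '%s\n' "$1" |
    awk '{ for (i = 1; i < NF; i++) if ($i == "join") { print $(i + 1); exit } }'
}
```

Usage: `JOIN_CMD=$(kubeadm token create --print-join-command)`, then `[ "$(join_endpoint "$JOIN_CMD")" = "${VIP}:8443" ] && for ip in 172.16.26.121 172.16.26.122 172.16.26.123; do ssh "$ip" "$JOIN_CMD"; done`.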

Install the cluster network

Choosing a network plugin

Installing the network plugin

Problems encountered

- Docker MTU configuration

Configure /etc/docker/daemon.json:

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://axejqb6p.mirror.aliyuncs.com"],
  "mtu": 1450,
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  }
}
```

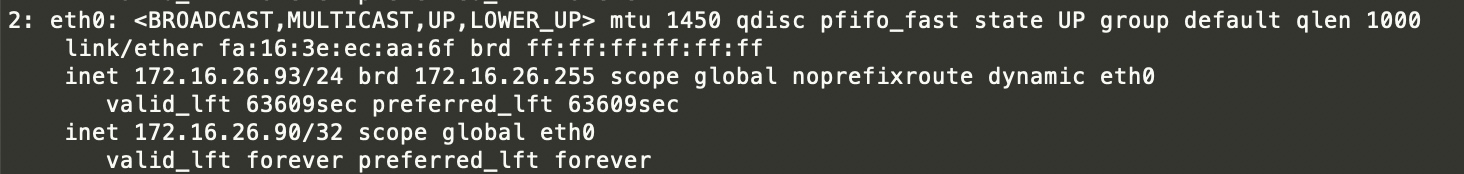

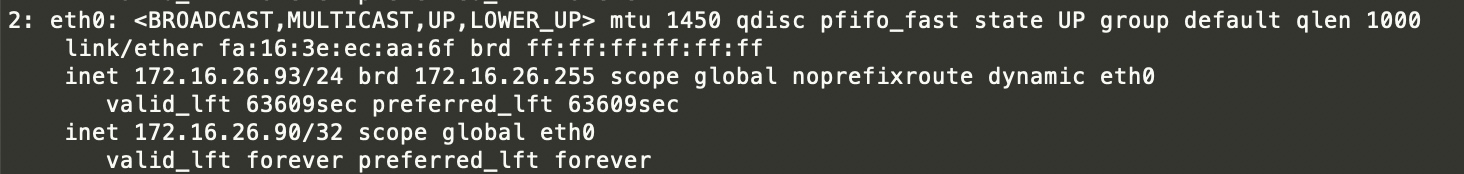
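The text does not explain where the value 1450 comes from. One common reason, stated here only as an assumption, is encapsulation overhead. VXLAN adds 50 bytes of headers to each packet (outer Ethernet 14 + IP 20 + UDP 8 + VXLAN 8), so on a 1500-byte host network the inner MTU must be 1450 or less. If the VMs already have a smaller MTU, the value must drop accordingly. Here is a sketch of a hypothetical helper, `overlay_mtu`. It reads an interface's MTU from sysfs and subtracts the overhead.

```bash
#!/usr/bin/env bash
# overlay_mtu IFACE [OVERHEAD] [SYSFS]: print the MTU of IFACE minus the
# encapsulation overhead (default 50 bytes, the VXLAN header size).
# SYSFS defaults to /sys/class/net.
overlay_mtu() {
  local iface="$1" overhead="${2:-50}" sysfs="${3:-/sys/class/net}"
  local mtu
  mtu="$(cat "$sysfs/$iface/mtu")" || return 1
  echo $(( mtu - overhead ))
}
```

Usage: `overlay_mtu eth0` prints a candidate value for `"mtu"` in daemon.json on that host.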

- VIP failover

The configuration I currently use:

/etc/haproxy/haproxy.cfg

```
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode tcp
    log global
    option tcplog
    option dontlognull
    option redispatch
    retries 3
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout check 10s
    maxconn 3000

listen stats
    mode http
    bind :10086
    stats enable
    stats uri /admin?stats
    stats auth admin:admin
    stats admin if TRUE

frontend k8s_https *:8443
    mode tcp
    maxconn 2000
    default_backend https_sri

backend https_sri
    balance roundrobin
    server master1-api 172.16.26.81:6443 check inter 10000 fall 2 rise 2 weight 1
    server master2-api 172.16.26.82:6443 check inter 10000 fall 2 rise 2 weight 1
    server master3-api 172.16.26.83:6443 check inter 10000 fall 2 rise 2 weight 1
```

/etc/keepalived/check_haproxy.sh

```bash
#!/bin/bash
VIRTUAL_IP=172.16.26.80

errorExit() {
    echo "*** $*" 1>&2
    exit 1
}

# only the node that currently holds the VIP checks HAProxy through it
if ip addr | grep -qF "$VIRTUAL_IP" ; then
    curl -s --max-time 2 --insecure https://${VIRTUAL_IP}:8443/ -o /dev/null || errorExit "Error GET https://${VIRTUAL_IP}:8443/"
fi
```

/etc/keepalived/keepalived.conf

```
vrrp_script haproxy-check {
    script "/bin/bash /etc/keepalived/check_haproxy.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance haproxy-vip {
    state BACKUP
    priority 101
    interface eth0
    virtual_router_id 47
    advert_int 3

    unicast_peer {
        172.16.26.81
        172.16.26.82
        172.16.26.83
    }

    virtual_ipaddress {
        172.16.26.80
    }

    track_script {
        haproxy-check
    }
}
```
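The file above is identical on all three masters: the same `priority 101`, and a `unicast_peer` list that includes the node's own address. A variant that is often used gives each master its own priority, lists only the other masters as peers, and sets `unicast_src_ip` explicitly. With `weight -2`, a failed check then drops the holder below the next master, so the VIP moves. Below is a sketch of a hypothetical helper, `render_keepalived_conf`, that prints such a per-node file. The priorities in the usage note (101/100/99) are my own assumption, not the author's setup.

```bash
#!/usr/bin/env bash
# render_keepalived_conf SELF_IP PRIORITY IFACE VIP PEER_IP...
# Print a keepalived.conf for one master: same check and VIP as above, but
# unicast_peer lists only the other masters and unicast_src_ip is explicit.
render_keepalived_conf() {
  local self_ip="$1" priority="$2" iface="$3" vip="$4" peer
  shift 4
  cat <<EOF
vrrp_script haproxy-check {
    script "/bin/bash /etc/keepalived/check_haproxy.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance haproxy-vip {
    state BACKUP
    priority ${priority}
    interface ${iface}
    virtual_router_id 47
    advert_int 3
    unicast_src_ip ${self_ip}
    unicast_peer {
EOF
  for peer in "$@"; do
    [ "$peer" = "$self_ip" ] && continue   # do not list this node as its own peer
    echo "        ${peer}"
  done
  cat <<EOF
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        haproxy-check
    }
}
EOF
}
```

Usage on master1: `render_keepalived_conf 172.16.26.81 101 eth0 172.16.26.80 172.16.26.81 172.16.26.82 172.16.26.83 > /etc/keepalived/keepalived.conf`. On master2 and master3, use 100 and 99 with their own addresses.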

References